Agents Are Hiring Humans. Who Is Securing Them?

5 February 2026

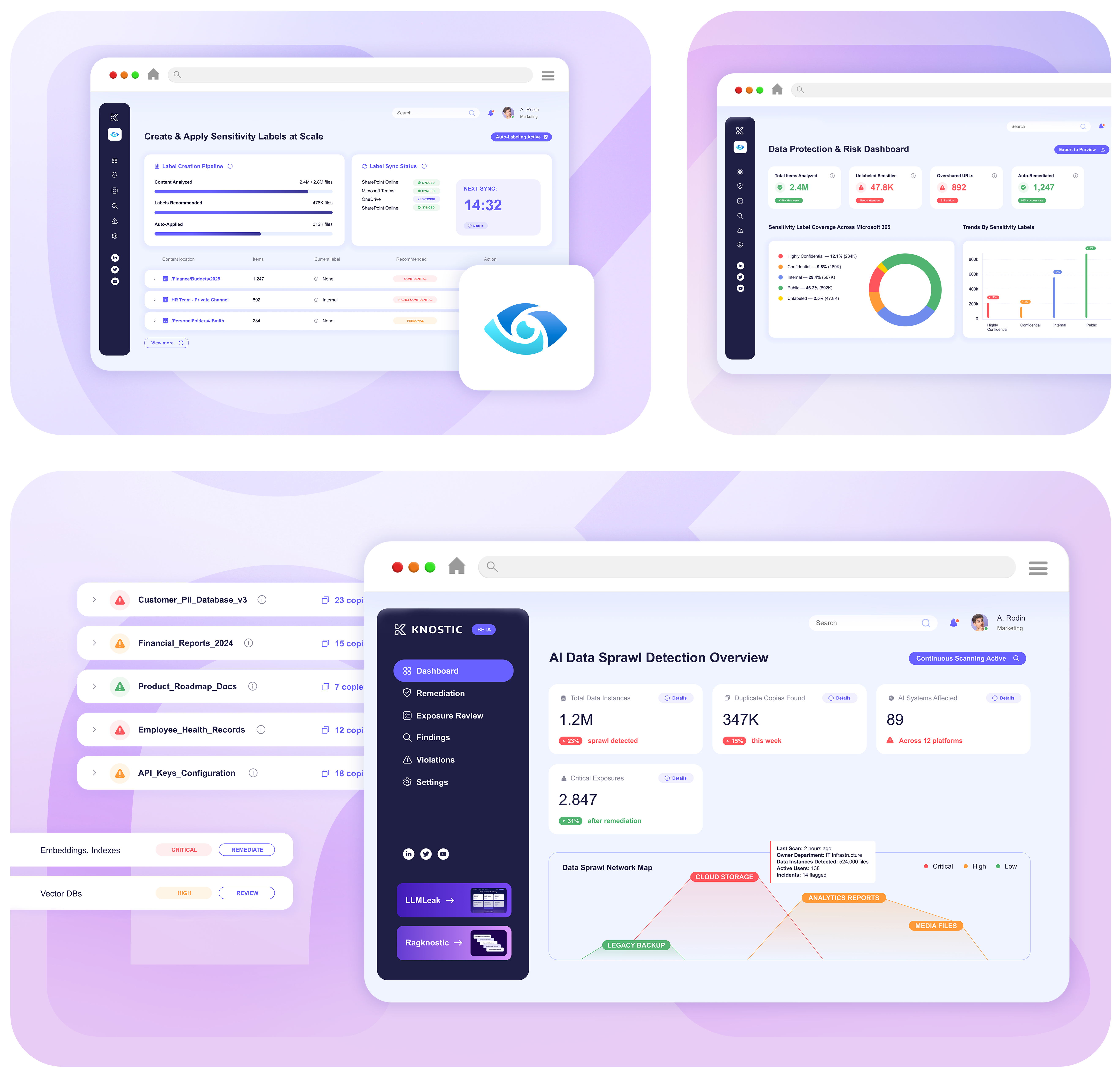

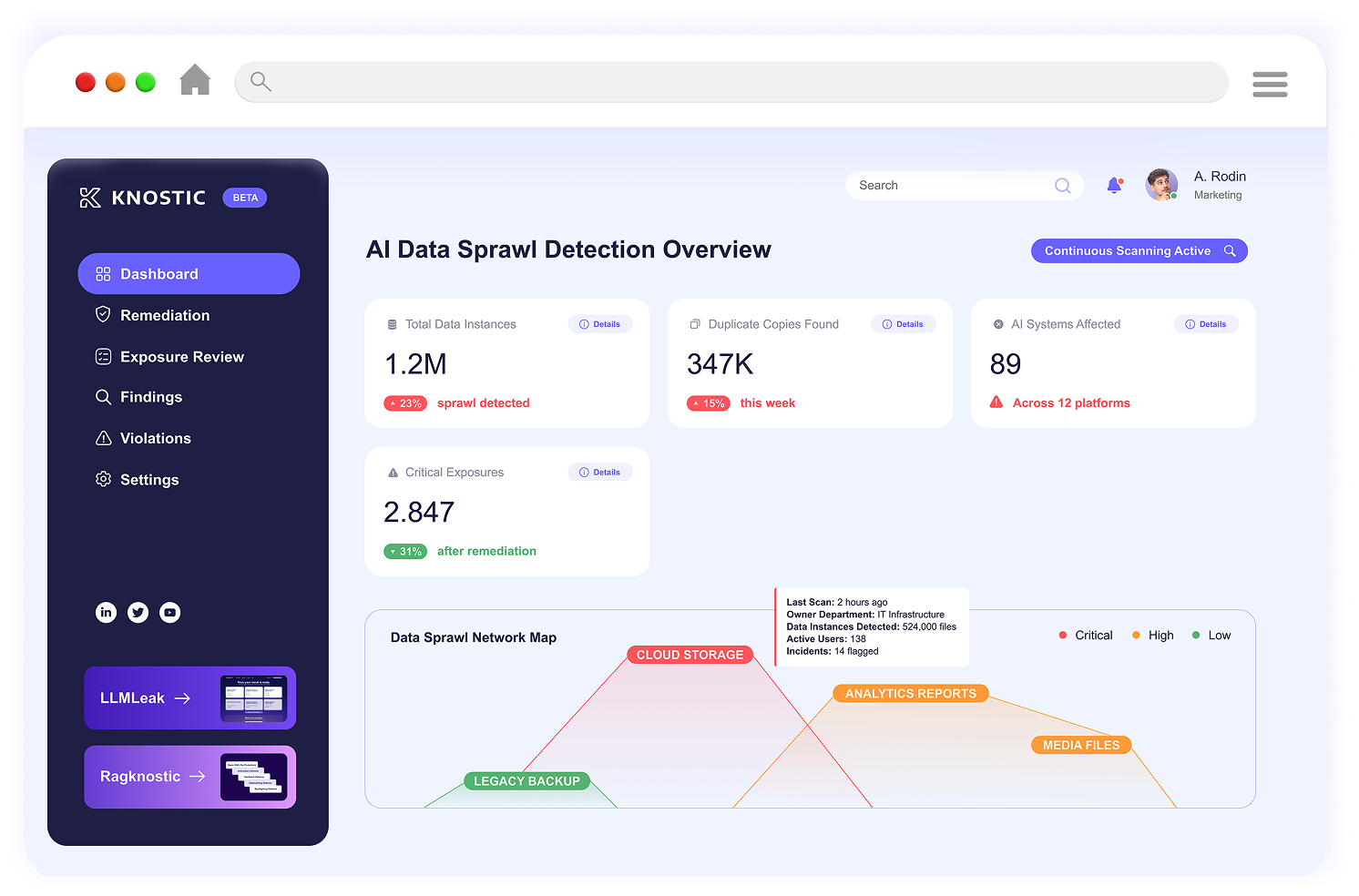

Keep data secure and compliant with a smarter way to prevent oversharing, automate labeling, and block AI-driven leaks.

Knostic maps permissions and detects excessive access so AI assistants only surface information each user is meant to see.

Knostic’s Prompt Gateway inspects prompts and responses in real time, blocking secrets, PII, and proprietary code before they leave.

Prompt Gateway detects and sanitizes manipulative inputs instantly, protecting AI models and applications from hijacking or data exfiltration.

Discover and control sensitive data across embeddings, indexes, and SaaS exports.

Knostic analyzes real usage patterns to apply accurate sensitivity labels, accelerating DLP and compliance programs.

AI Data Governance maps permissions and user roles, enforcing need-to-know policies so AI assistants only surface content appropriate for each user.

Yes. Prompt Gateway inspects prompts and responses in real time, blocking secrets, PII, and proprietary code before they leave.

Prompt Gateway detects and sanitizes malicious prompts instantly, stopping attackers from hijacking AI models or exfiltrating data.

AI-driven analysis maps sensitive data across embeddings, indexes, and SaaS exports, ranking exposures by business and compliance impact.

AI Data Governance applies accurate sensitivity labels based on real usage patterns, giving DLP and governance tools the context to enforce policy effectively.

No. Our suite integrates with existing platforms and enforces guardrails in real time, enabling safe AI adoption without disrupting workflows.

Knostic prevents oversharing, stops leaks to public LLMs, blocks prompt injection, and automates governance. Your organization can adopt AI with confidence.

United States

205 Van Buren St,

Herndon, VA 20170