How to Build a Shadow AI Detection Program in 90 Days

26 January 2026

26 January 2026

21 January 2026

20 January 2026

19 January 2026

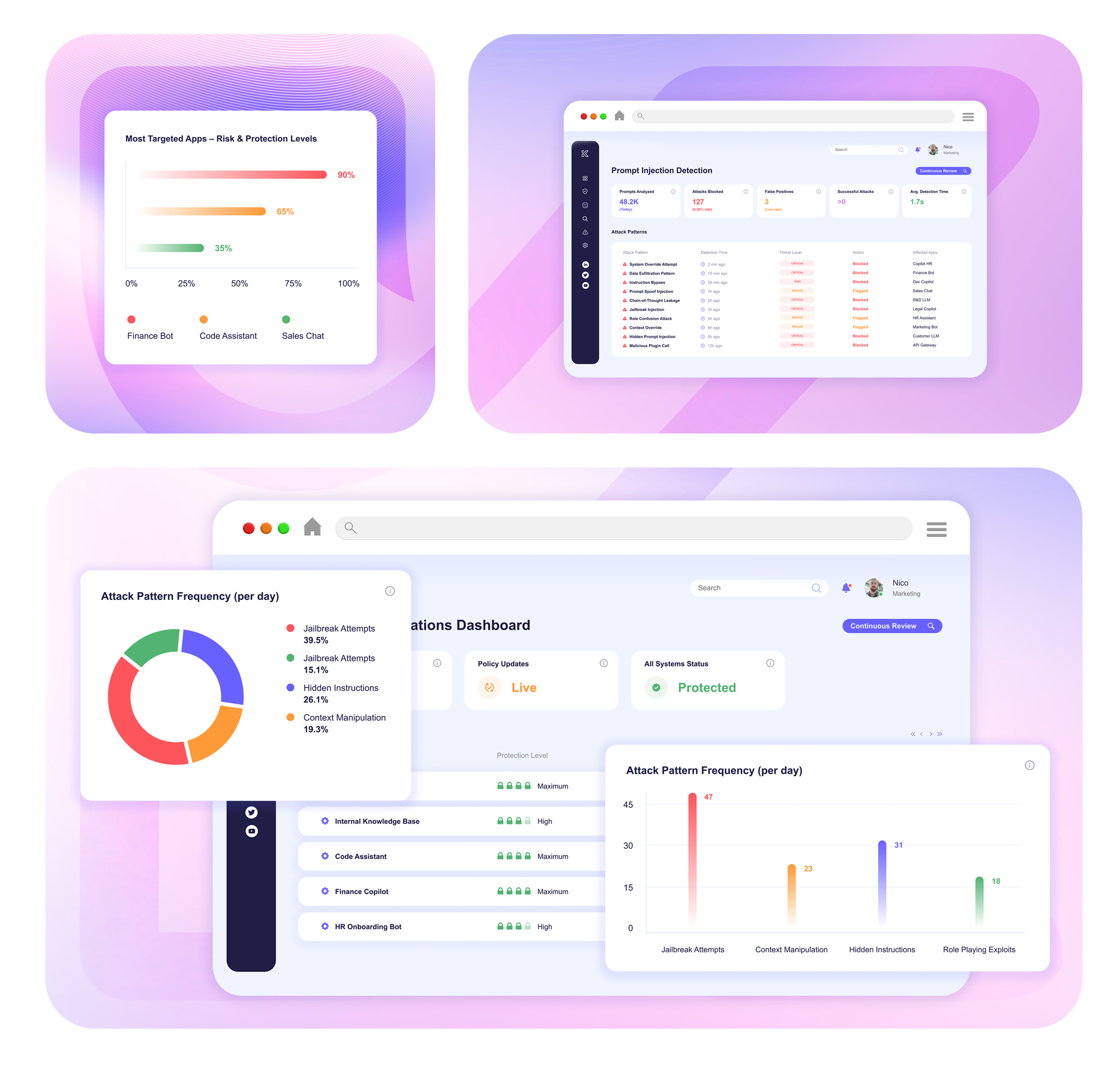

Prevent malicious prompts from hijacking your AI. Knostic stops leaks, fraud, and loss of reputation in real time.

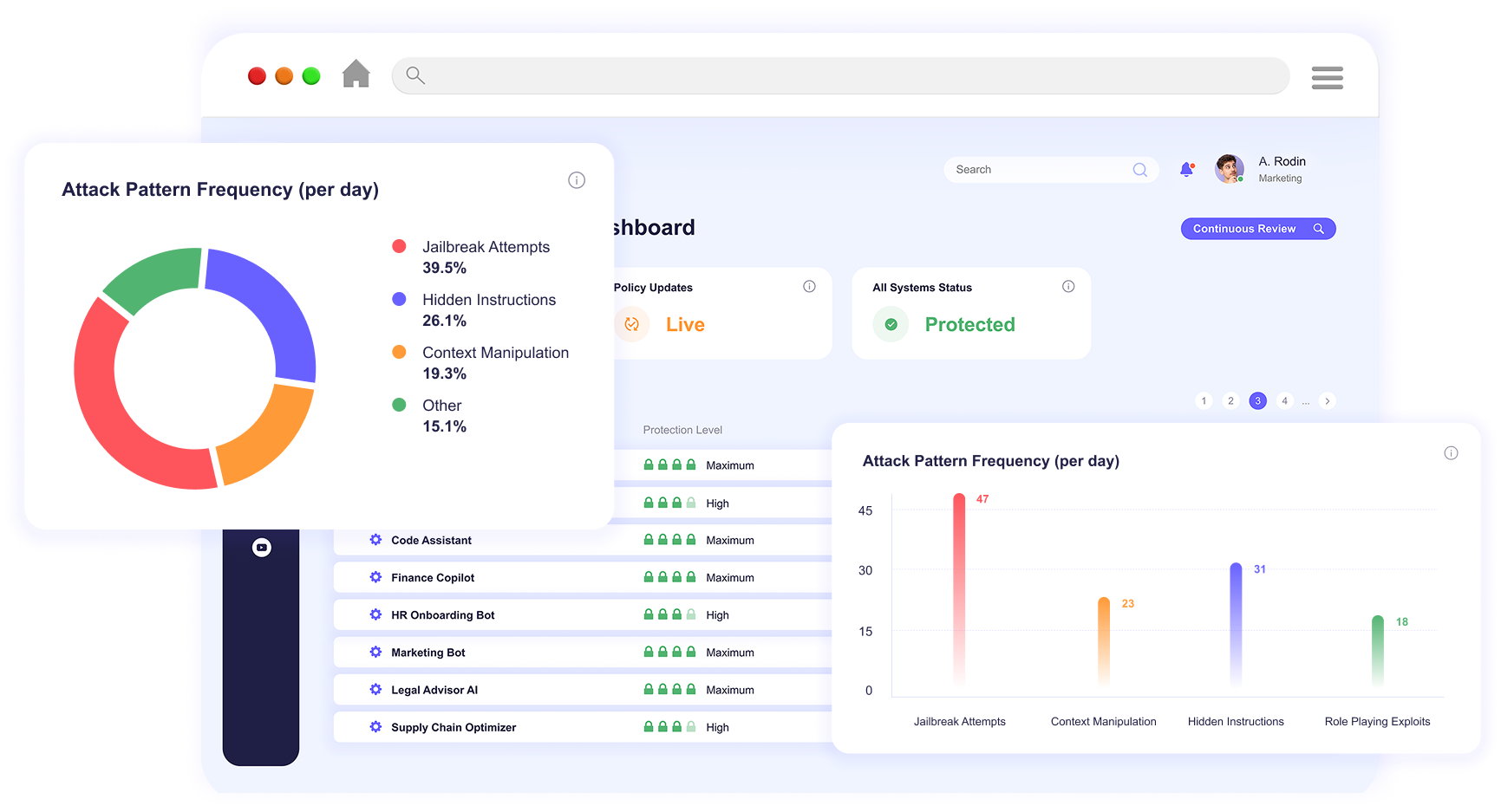

Knostic analyzes every prompt as it’s submitted, spotting manipulation attempts, like hidden instructions or jailbreaks, before they can cause harm

Suspicious inputs are automatically blocked or cleaned, preventing data exfiltration and unauthorized actions without disrupting valid queries

Knostic protects against jailbreaks, denial-of-wallet exploits, and hidden instructions, ensuring your AI applications stay secure and compliant

Analyze and filter prompts before they reach the model

Block or clean malicious prompts in real time

Protect against jailbreaks, hidden instructions, and denial-of-wallet attacks

Tune sensitivity and enforcement for each application or model

Maintain detailed records for incident response and compliance

A crafted prompt designed to override system instructions, exfiltrate sensitive data, or trigger harmful actions.

Because they rely on static filters or network firewalls, which cannot parse evolving natural-language manipulations or jailbreak attempts.

By inspecting prompts and responses in real time, detecting manipulation attempts, and applying adaptive policies tuned to each application.

No. Policies can be tuned to balance filtering sensitivity with usability, minimizing false positives.

Absolutely. Security teams can tune detection sensitivity and choose to block, log, or sanitize suspicious prompts based on context.

Knostic supports standalone LLM deployments, multi-agent frameworks, and API/gateway integrations.

Knostic keeps your AI applications secure and compliant by inspecting prompts and responses in real time, blocking malicious inputs, and defending against jailbreaks and hidden instructions.

United States

205 Van Buren St,

Herndon, VA 20170

Get the latest research, tools, and expert insights from Knostic.