Free LLM-based Vulnerability Scans for Open Source Projects

2 March 2026

5 February 2026



Knowledge Security Platform for Red Teams & Pen Testers | Knostic

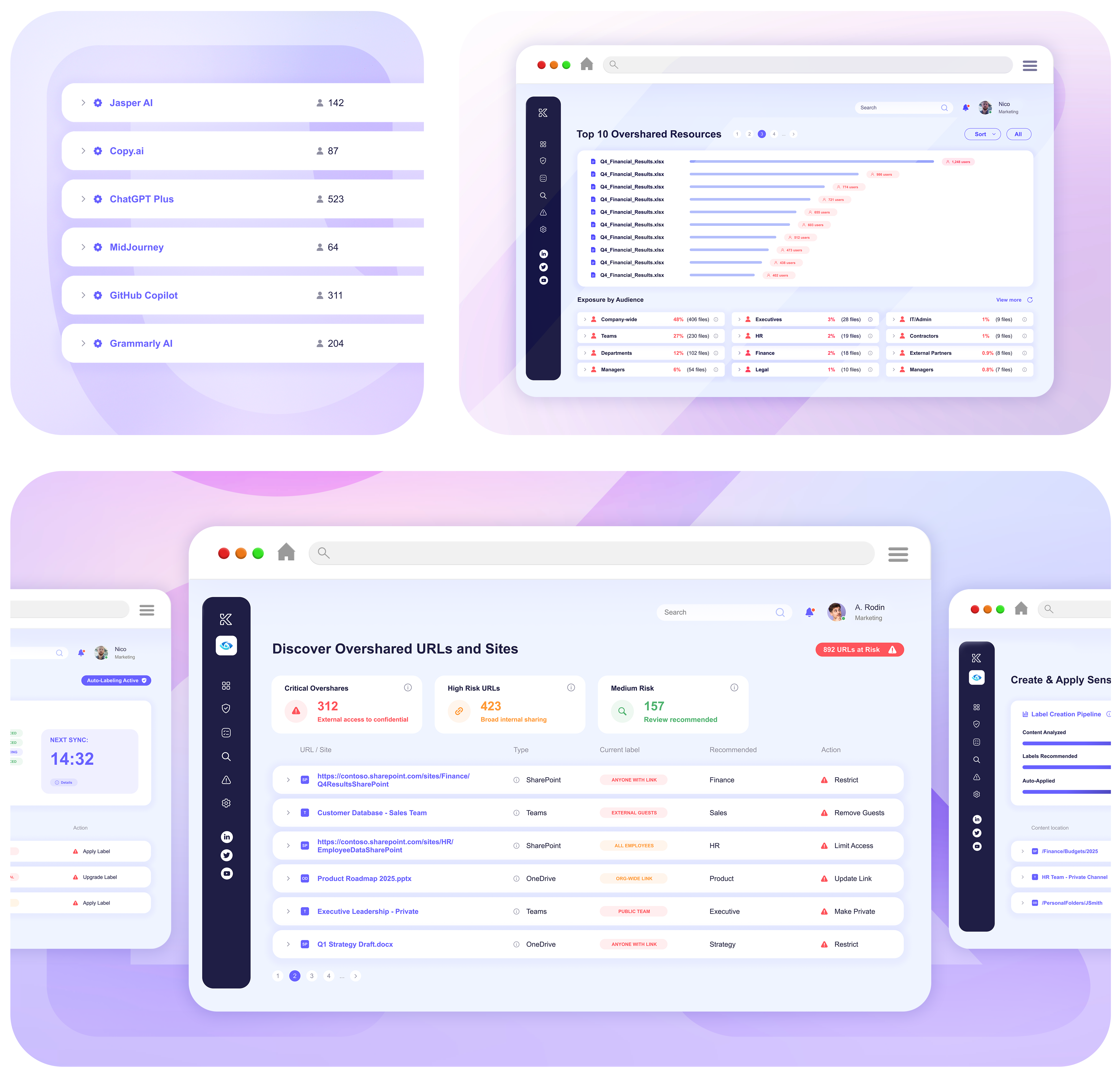

Knostic gives you the tools to simulate adversarial techniques and uncover risks before they become breaches.

and jailbreaks to test how copilots and agents handle adversarial input.

Ever wonder what your Copilot or internal LLM might accidentally reveal? We help you test for real-world oversharing risks with role-specific prompts that mimic real workplace questions.

The RAG Security Training Simulator is a free, interactive web app that teaches you how to defend AI systems, especially those using Retrieval-Augmented Generation (RAG), against prompt injection attacks.

Surface hidden AI threats before adversaries do. Turn findings into additional funding for fixes.

Knostic helps red teams uncover vulnerabilities so organizations can harden their defenses before real attacks hit.

United States

205 Van Buren St,

Herndon, VA 20170
