Agents Are Hiring Humans. Who Is Securing Them?

5 February 2026

AI copilots can expose hidden data. Knostic provides visibility and control to prevent leaks.

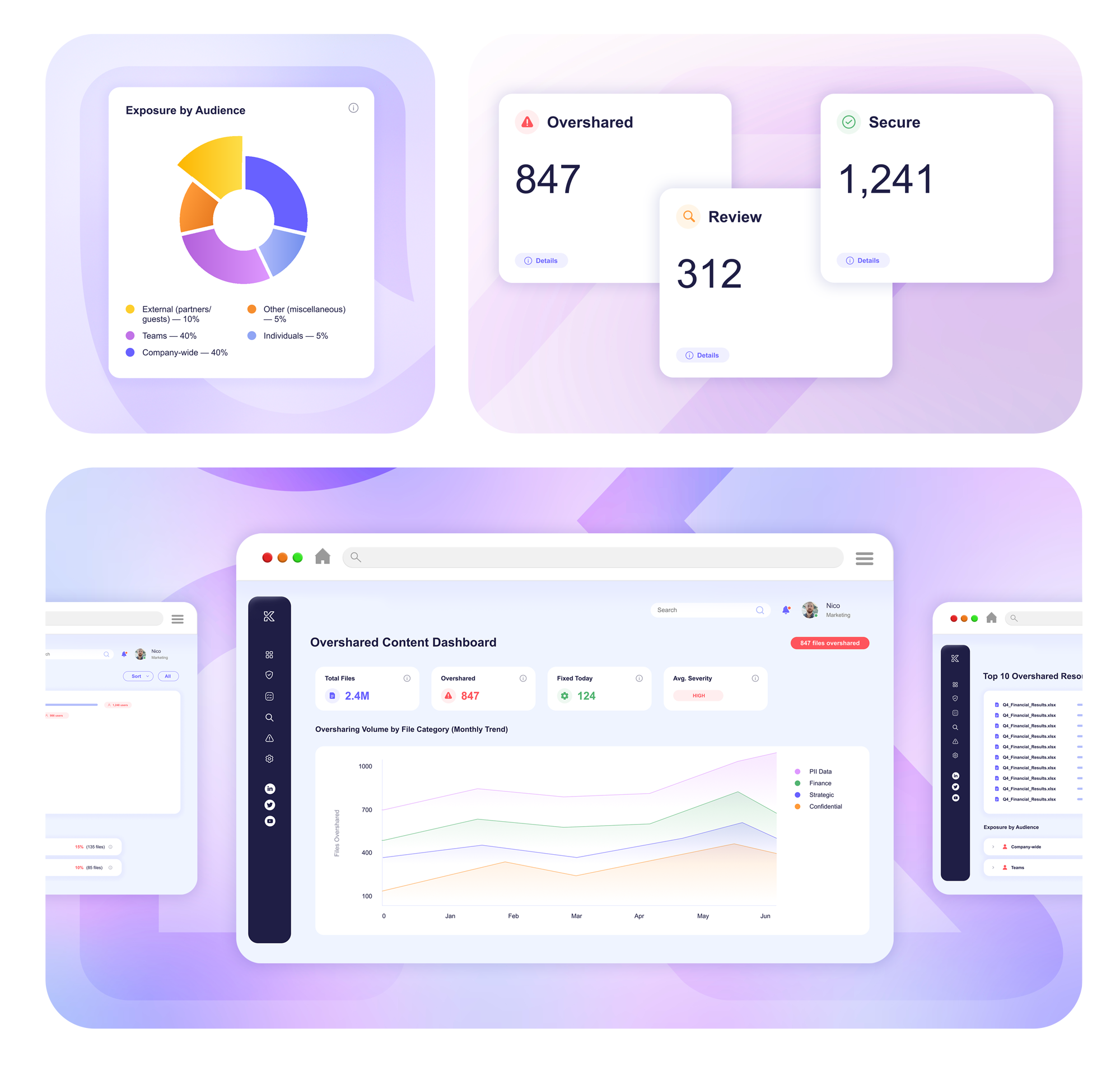

Knostic maps permissions and user roles to identify documents, conversations, and data exposed beyond business need, before AI assistants can surface them.

Knostic builds a unified graph of access relationships, highlighting excessive permissions and ranking the riskiest exposures for fast remediation.
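To illustrate the general idea of an access graph, here is a minimal sketch of resolving inherited access through nested group memberships and then ranking documents by a simple risk score. All group names, documents, sensitivity scores, and the scoring formula (sensitivity multiplied by the number of effective readers) are hypothetical assumptions for illustration. This is not Knostic's implementation or data model.

```python
# Hypothetical toy access graph: group -> members (users or nested groups)
GROUP_MEMBERS = {
    "all-staff": {"eng", "sales", "dana"},
    "eng": {"alice", "bob"},
    "sales": {"carol"},
}

# Hypothetical document grants: document -> (principals granted read, sensitivity 1-10)
DOCUMENTS = {
    "q3-board-deck.pptx": ({"all-staff"}, 9),
    "payroll-2025.xlsx": ({"sales"}, 10),
    "eng-handbook.md": ({"eng"}, 2),
}


def expand(principal, seen=None):
    """Resolve a principal to the set of users it covers, following nested groups."""
    if seen is None:
        seen = set()
    if principal in seen:  # guard against membership cycles
        return set()
    seen.add(principal)
    if principal not in GROUP_MEMBERS:
        return {principal}  # anything that is not a group is treated as a user
    users = set()
    for member in GROUP_MEMBERS[principal]:
        users |= expand(member, seen)
    return users


def effective_readers(doc):
    """All users who can read a document, including access inherited via groups."""
    grants, _ = DOCUMENTS[doc]
    readers = set()
    for principal in grants:
        readers |= expand(principal)
    return readers


def rank_exposures():
    """Rank documents by a toy risk score: sensitivity x number of effective readers."""
    scored = []
    for doc, (_, sensitivity) in DOCUMENTS.items():
        readers = effective_readers(doc)
        scored.append((sensitivity * len(readers), doc, sorted(readers)))
    return sorted(scored, reverse=True)


if __name__ == "__main__":
    for score, doc, readers in rank_exposures():
        print(score, doc, readers)
```

In this toy data the board deck ranks first: it is granted to a broad group, so four users inherit access even though no individual grant looks alarming on its own.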

Knostic applies or recommends sensitivity labels and tightens permissions, ensuring access aligns with organizational policy and regulatory requirements.

- Identify overshared files, conversations, and indexes across repositories and AI tools
- Visualize file permissions, group memberships, and inherited access
- Compare actual access against least-privilege baselines for each role
- Show how overshared content could flow into AI training or retrieval systems
- Apply sensitivity labels and right-size permissions with guided, policy-based fixes
- Detect and alert on new oversharing or policy drift in real time

Oversharing matters because any file an AI assistant can access can surface in responses to everyday queries.

Knostic detects oversharing by mapping permissions across repositories, building access graphs, and comparing them against persona-based least-privilege models.
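A persona-based least-privilege comparison can be sketched as a set difference: for each user, subtract what their role is expected to need from what they can actually reach. The roles, baselines, users, and documents below are hypothetical, and real baselines would be far richer than a flat set of document names. This is an illustrative sketch, not Knostic's model.

```python
from dataclasses import dataclass

# Hypothetical persona baselines: role -> documents the role genuinely needs
ROLE_BASELINE = {
    "engineer": {"eng-handbook.md", "api-spec.yaml"},
    "sales-rep": {"price-list.xlsx"},
}

# Hypothetical user-to-role assignments
USER_ROLE = {"alice": "engineer", "carol": "sales-rep"}

# Hypothetical actual access, e.g. as resolved from an access graph
ACTUAL_ACCESS = {
    "alice": {"eng-handbook.md", "api-spec.yaml", "payroll-2025.xlsx"},
    "carol": {"price-list.xlsx", "q3-board-deck.pptx"},
}


@dataclass(frozen=True)
class Finding:
    """One piece of access that exceeds the user's role baseline."""
    user: str
    role: str
    document: str


def excess_access():
    """Return access beyond each role's baseline, sorted for stable output."""
    findings = []
    for user, docs in sorted(ACTUAL_ACCESS.items()):
        role = USER_ROLE[user]
        baseline = ROLE_BASELINE.get(role, set())  # unknown role: nothing is expected
        for doc in sorted(docs - baseline):
            findings.append(Finding(user, role, doc))
    return findings


if __name__ == "__main__":
    for finding in excess_access():
        print(finding)
```

Each finding is a candidate for remediation, such as removing the user from a group or revoking a direct grant.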

Knostic shows how overshared data could flow into AI systems through embeddings, indexes, or RAG.

Knostic identifies not only directly accessible sensitive content but also where data points could be combined by AI to infer confidential insights.
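The inference risk described above can be illustrated with a toy check: a user whose readable documents, taken together, expose a sensitive combination of data points that no single document contains. The field names, documents, and "sensitive combination" rule are hypothetical assumptions, and the check is deliberately simplified: it ignores whether the fields can actually be joined (here, via a shared job title). It is not a description of how Knostic performs this analysis.

```python
# Hypothetical: which data points each document exposes
DOC_FIELDS = {
    "org-chart.pdf": {"employee_name", "title"},
    "salary-bands.xlsx": {"title", "salary_band"},
    "hr-export.csv": {"employee_name", "salary_band"},
}

# Hypothetical combinations that reveal confidential facts when seen together
SENSITIVE_COMBINATIONS = [
    frozenset({"employee_name", "salary_band"}),  # implies an individual's pay
]


def inference_risks(readable_docs):
    """Flag sensitive combinations that only emerge by combining documents.

    Combinations already contained in a single readable document are direct
    exposures, not inference risks, so they are skipped here.
    """
    exposed = set()
    for doc in readable_docs:
        exposed |= DOC_FIELDS.get(doc, set())
    risks = []
    for combo in SENSITIVE_COMBINATIONS:
        directly_exposed = any(combo <= DOC_FIELDS.get(d, set()) for d in readable_docs)
        if combo <= exposed and not directly_exposed:
            risks.append(combo)
    return risks


if __name__ == "__main__":
    print(inference_risks({"org-chart.pdf", "salary-bands.xlsx"}))
```

Neither the org chart nor the salary bands would be flagged on its own, yet a user who can read both could ask an assistant to infer individual pay.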

Findings are prioritized and include step-by-step fixes, such as revoking access links, tightening memberships, or relabeling sensitive files.

Auditable reports and continuous monitoring help meet GDPR, HIPAA, and EU AI Act requirements by demonstrating that sensitive data is properly governed.

Yes. Knostic complements Microsoft Purview and other DLP platforms by feeding them precise labeling and remediation actions.

Knostic uncovers sensitive content exposed beyond business need, prioritizes risks, and provides guided remediation.

205 Van Buren St, Herndon, VA 20170, United States

Get the latest research, tools, and expert insights from Knostic.