Agents Are Hiring Humans. Who Is Securing Them?

5 February 2026

Knostic enforces guardrails and monitors AI development environments so teams can code and automate safely without slowing innovation.

Kirin protects Copilot, Cursor, and other AI coding tools without slowing innovation. Automatically scan dependencies, validate MCP servers, and enforce guardrails.

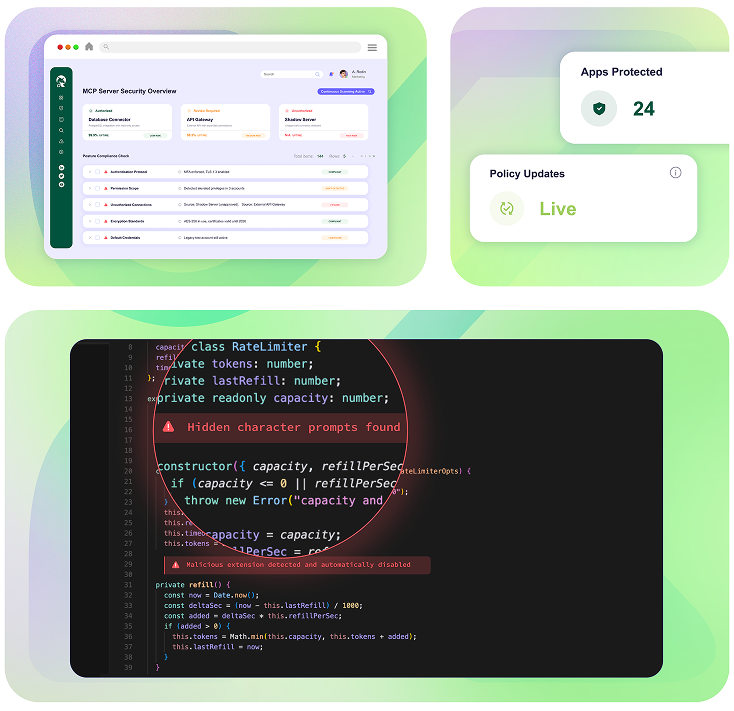

Stop misconfigurations and hidden backdoors before they create risk. Knostic continuously validates configurations, monitors connectors, and blocks rogue servers.

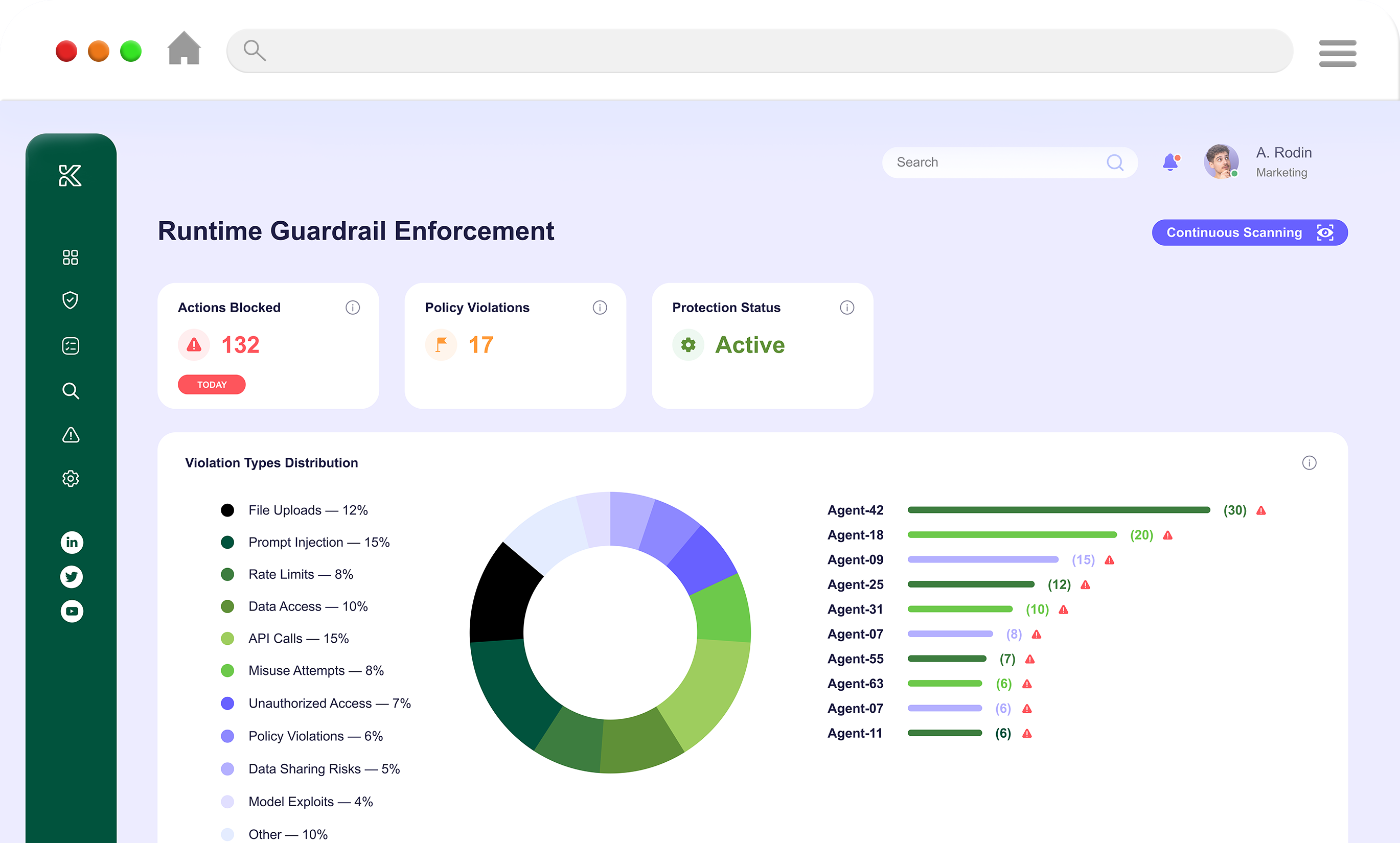

Keep AI agents productive without creating risk. Knostic applies least-privilege access, monitors runtime activity, and blocks unsafe actions.

Kirin continuously validates MCP servers, scans dependencies, and enforces IDE guardrails, protecting developers without slowing their workflow.

Misconfigured or malicious MCP servers can create hidden backdoors. Kirin detects misconfigurations, flags rogue connectors, and enforces secure configurations.

Kirin applies least-privilege access controls, monitors runtime activity, and blocks unsafe or anomalous actions to prevent misuse or data leakage.

Kirin supports diverse IDEs, agents, and MCP implementations, applying consistent security policies across varied development stacks.

Kirin enforces guardrails, validates MCP servers, and monitors AI agents. You can build confidently without creating hidden risk.
