Agents Are Hiring Humans. Who Is Securing Them?

5 February 2026

Knostic simulates, detects, and prevents knowledge exposure before AI deployment. Get certainty your enterprise data stays secure.

Deploying copilots without proper controls opens your organization to critical vulnerabilities that traditional security tools miss.

AI assistants expose confidential information across organizational silos, bypassing existing permissions.

Outdated documents and deprecated policies create compliance gaps and misinformation risks.

Security controls degrade over time as data governance rules become inconsistent and outdated.

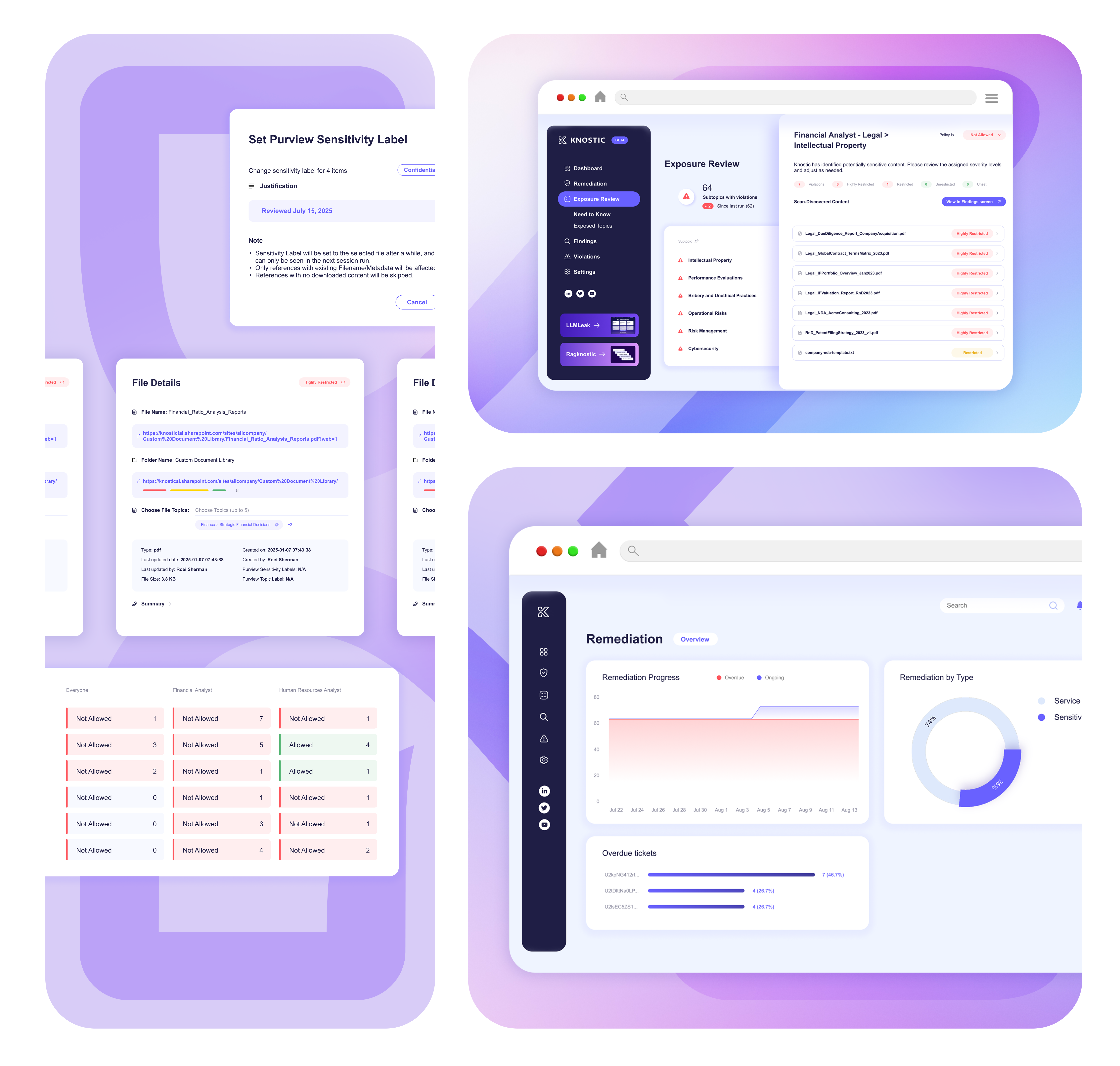

Simulate AI behavior to identify knowledge-level vulnerabilities before deployment.

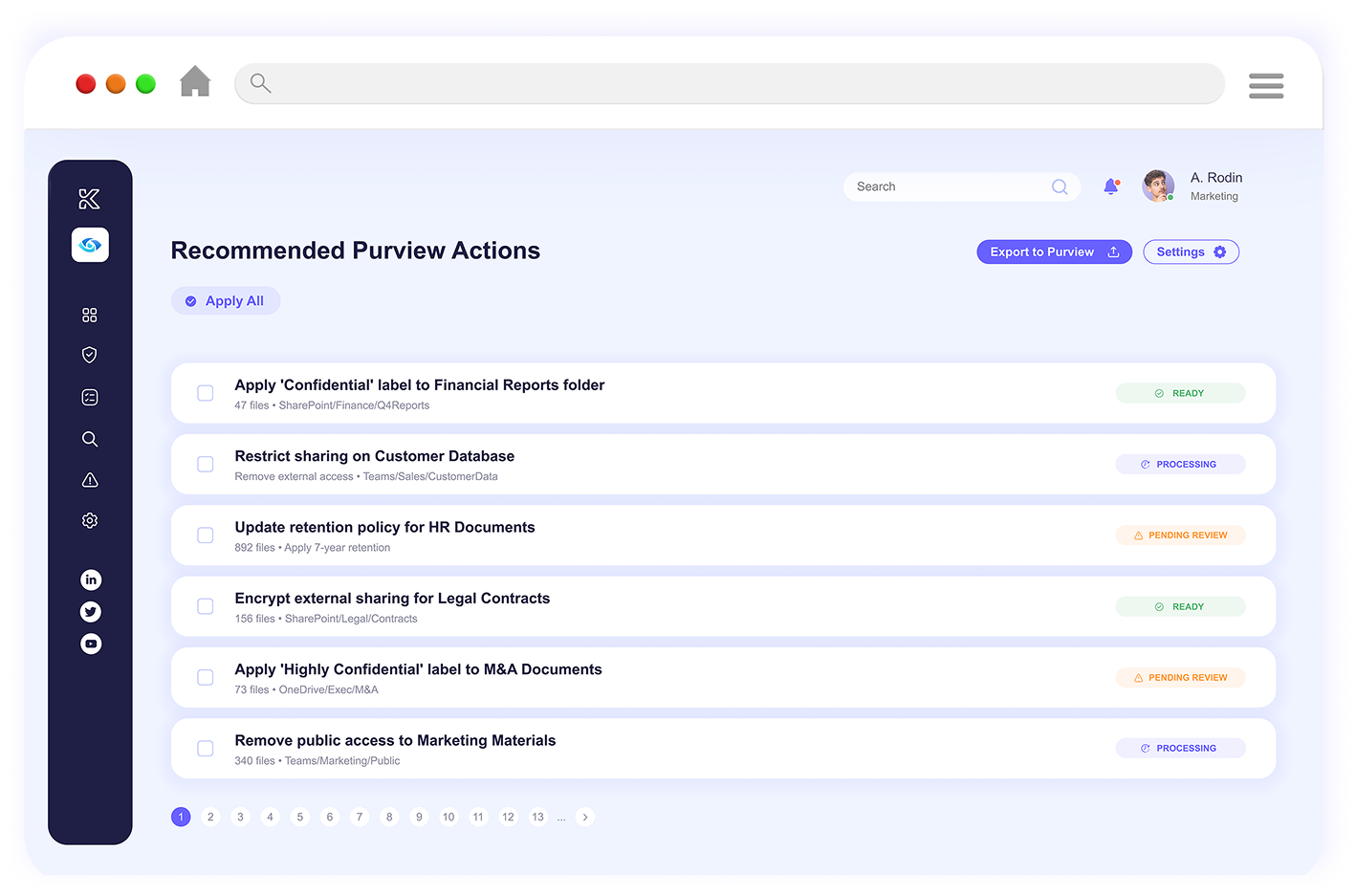

Implement role-based access policies and automated labeling systems.

Real-time detection of policy drift and emerging exposure risks.

Executive dashboards and audit trails demonstrate compliance and ROI.

Knostic tests over 20 prompt patterns per user persona, revealing hidden oversharing paths that traditional tools miss.

Identifies inference-based exposures where AI combines data inappropriately across security boundaries.

Tests each user persona against realistic scenarios to predict actual AI behavior patterns.

Provides 0-100 readiness scores with specific recommendations for improvement.

Automated policy implementation: deploy role-based controls and sensitivity labeling without complex configuration or coding requirements.

Continuous surveillance detects policy drift and new vulnerabilities as your data environment evolves.

Board-ready dashboards translate technical metrics into business impact, showing compliance status and risk reduction.

Don't wait for a security incident. Get your personalized AI readiness score and actionable recommendations in minutes.

Leading enterprises trust Knostic to secure their AI initiatives while enabling innovation.

Discover your organization's AI exposure level with our comprehensive diagnostic tool. No commitment required.

Get clarity on the most pressing concerns IT leaders face when deploying enterprise AI assistants.

Knostic prevents AI from accessing conflicting or outdated information sources that create inconsistent responses.

Our simulation engine validates that security controls work as intended before users interact with AI.

Role-based controls ensure AI assistants respect organizational boundaries and data classification levels.

Knostic is the comprehensive impartial solution to stop data leakage.

205 Van Buren St, Herndon, VA 20170, United States

Get the latest research, tools, and expert insights from Knostic.