How We Compared Aim Security and Prompt Security

In December 2025, we evaluated Aim Security vs. Prompt Security using the most current publicly available product documentation, and we mapped documented features to independent industry benchmarks. To ground “Shadow AI” risk, we used IBM’s definition: “unsanctioned use of AI tools without formal IT oversight”. Then, we applied the same internal, repeatable evaluation framework used for all platform reviews.

This comparison uses four evaluation categories: AI assistant security, AI coding safety, GRC, and AI attack simulation. These categories align with themes in Cisco’s AI Readiness Index, which found that only 32% of respondents report high data readiness. We also reviewed how each platform supports data protection in light of IBM’s Cost of a Data Breach Report 2025, which puts the global average cost of a breach at $4.4 million, to gauge whether controls translate into measurable risk reduction. For context alongside Aim Security and Prompt Security, we also included Knostic, a leading tool that addresses the same categories.

Aim Security vs. Prompt Security vs. Knostic

To align the comparison with enterprise risk, governance, and security leadership priorities, the capability categories below are structured around four domains:

- AI Assistant Security

- AI Coding Safety / Secure AI-Assisted Development

- Governance, Risk, and Compliance (GRC)

- AI Attack Simulation

This structure reflects how CISOs, DPOs, and compliance leaders evaluate AI platforms in practice: beyond surface-level controls, with a focus on auditability, executive oversight, regulatory readiness, and pre-adoption risk assessment.

To ensure neutrality, capability descriptions are based on publicly available documentation as of November-December 2025. Knostic evaluations combine public materials with hands-on assessment, and we note where a finding relies on direct testing.

Main Takeaways

While both Aim Security and Prompt Security offer valuable protections for AI systems, their coverage remains narrowly focused. The following comparison highlights where each platform excels and where it falls short across four key AI security domains:

- Aim Security and Prompt Security each address essential aspects of AI security, but neither fully covers all four critical domains: Shadow AI governance, AI-assistant security, prompt-layer control, and AI-coding safety.

- Aim Security is strong at runtime protection and AI-agent firewalling, which may benefit application-level AI deployments. However, its public documentation lacks clear emphasis on broad Shadow AI discovery and on developer-side code-assistant safety, which limits its ability to support enterprise-wide visibility.

- Prompt Security provides strong protection for LLM-based applications and code assistants, and may protect prompts and model outputs. But its publicly available materials do not describe comprehensive enterprise-wide Shadow AI governance or oversight across all AI interactions.

- Most platforms specialize in narrow domains such as runtime filtering, prompt protection, or developer security, rather than governance across all AI layers. This comparison, therefore, reflects verifiable capability scopes rather than assuming broader coverage.

- Knostic addresses these gaps by providing coverage of Shadow AI discovery, prompt-layer controls, assistant-level governance, and AI-coding safety within a single continuous model.

- For organizations that adopt multiple AI tools (assistants, code tools, custom apps) and need compliance for sensitive data, developer safety, and governance visibility, Knostic provides the broadest end-to-end alignment among the platforms evaluated.

- If you want AI security that scales with your entire AI supply chain (from code to prompts to deployment to runtime), choosing Knostic will reduce operational overhead and minimize the gaps created by relying on multiple point solutions.

Viewed through a governance-first lens, the differences between Aim Security, Prompt Security, and Knostic become clearer. Aim Security and Prompt Security primarily address specific technical control surfaces (runtime execution and LLM pipelines, respectively), while Knostic extends into enterprise risk management, compliance oversight, and board-relevant governance. This distinction matters for organizations that must demonstrate auditability, regulatory readiness, and executive accountability as AI adoption scales.

Why Companies Pick Knostic

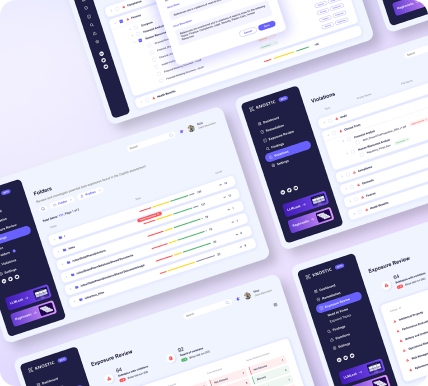

Companies choose Knostic because it offers end-to-end AI security that covers shadow AI, prompt-level controls, assistant/agent safety, and AI-coding security in one platform. Many existing tools cover one or two of those capabilities; Knostic integrates them into a single governance model. Importantly, this integration is designed to support enterprise risk management, auditability, and executive oversight, not just technical enforcement, aligning AI adoption with compliance, governance, and board-level accountability.

Knostic’s approach helps organizations manage AI risk without fragmenting their security stack. The platform enables continuous visibility, policy enforcement, and auditability, which are essential as AI tools proliferate across departments. Because Knostic adapts to different use cases (data, assistants, code, agents, etc.), it works for both developers and security/compliance teams. This broad coverage reduces the need to maintain multiple disparate tools and lowers operational burden. For companies seeking scalable AI adoption with strong security, Knostic is often the preferred choice over niche or partial-coverage solutions.

Visibility and Control Over AI Use

Knostic provides comprehensive visibility and control across enterprise AI usage through its AI-first platform. Its Kirin solution secures AI coding assistants by monitoring developers' IDEs in real time, detecting malicious MCP servers, unsafe extensions, and credential-harvesting attacks before they execute. Kirin also identifies shadow AI usage by scanning browsers and IDEs for unauthorized AI tools, giving security teams complete visibility into where users are leveraging AI. It delivers audit trails of blocked threats and detected shadow AI, centralized policy management, and actionable alerts when AI tools exhibit risky behavior.
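To make the shadow AI check more concrete, here is a minimal Python sketch of one small piece of it: comparing the AI extensions installed in an IDE against a sanctioned list. This is a hypothetical illustration, not Kirin's implementation. The catalog contents, the `Finding` type, and `classify_extensions` are names chosen for the example, and the catalog holds a few example VS Code extension IDs rather than a complete list.

```python
from dataclasses import dataclass

# Hypothetical, illustrative catalog of AI-related VS Code extension IDs,
# stored lowercase. A real deployment would maintain this list centrally.
KNOWN_AI_EXTENSIONS = {
    "github.copilot",
    "continue.continue",
    "codeium.codeium",
}


@dataclass(frozen=True)
class Finding:
    extension_id: str
    status: str  # "sanctioned" or "shadow_ai"


def classify_extensions(installed: list[str], sanctioned: set[str]) -> list[Finding]:
    """Report each known AI extension as sanctioned or as shadow AI."""
    allowed = {s.strip().lower() for s in sanctioned}
    findings = []
    for raw_id in installed:
        ext_id = raw_id.strip().lower()  # normalize before comparing
        if ext_id not in KNOWN_AI_EXTENSIONS:
            continue  # not a known AI tool; out of scope for this check
        status = "sanctioned" if ext_id in allowed else "shadow_ai"
        findings.append(Finding(ext_id, status))
    return findings


if __name__ == "__main__":
    installed = ["GitHub.copilot", "ms-python.python", "Codeium.codeium"]
    for f in classify_extensions(installed, sanctioned={"github.copilot"}):
        print(f.extension_id, f.status)
```

In practice the `installed` list might come from running `code --list-extensions`, which prints one extension ID per line. A real product would also need to cover browsers, other IDEs, and AI tools that are not yet in any catalog.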

For enterprise AI search tools like Microsoft Copilot, Glean, and Gemini, Knostic's AI Assistant Security solution audits where sensitive information can be exposed through AI interactions, then establishes intelligent boundaries so AI systems respect data permissions and access controls. Unlike traditional security tools, it provides specialized monitoring built for the unique inference and exposure patterns created by large language models.

The platform continuously monitors usage as employees adopt new tools, ensuring governance keeps pace with evolving AI adoption. Risk scoring and prioritization highlight critical exposures first, helping teams focus remediation where it matters most. These insights support executive reporting, insider risk validation, regulatory readiness, and AI exposure mapping during audits or M&A due diligence.

These capabilities rely on native connectors for development environments such as VS Code and Cursor, on browser activity monitoring, and on integrations with M365, Copilot, Glean, and other enterprise systems. Optional integrations with SIEMs or additional APIs can deepen monitoring as needed.

Stopping AI Assistants from Saying What They Shouldn't

Knostic's AI Assistant Security solution enforces intelligent access boundaries so AI search tools like Copilot, Glean, and Gemini access only the data users are authorized to see, nothing more. It continuously audits AI interactions to detect where sensitive information has been inappropriately exposed, then establishes controls that make AI systems respect data permissions and access policies in real time.

Knostic applies consistent security policies across enterprise AI assistants, AI coding tools, and development environments, giving control regardless of deployment type. It maintains full audit logs for compliance and incident response, enabling visibility into AI activity and threat patterns across the organization. Companies get the productivity benefits of AI assistants while maintaining security and compliance, reducing the risk of data exposure or intellectual property leaks.

Policies may be configured as static governance rules or as adaptive, context-aware controls, depending on the enterprise's deployment. Knostic supports oversight for RAG-based AI systems by assessing knowledge exposure patterns and identifying where AI assistants can access or infer sensitive information beyond intended boundaries.
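One common exposure pattern in RAG-based assistants is oversharing: a sensitive file whose permissions include an organization-wide group, so the assistant can surface it to nearly anyone. The Python sketch below is a hypothetical illustration, not Knostic's method, that flags such items from an index inventory. The group names, label names, and the `IndexedItem` type are assumptions, and the check covers direct access only, not information an assistant might infer by combining several sources.

```python
from dataclasses import dataclass

# Hypothetical names for groups that grant near-organization-wide access.
BROAD_GROUPS = {"everyone", "all-employees"}
# Hypothetical sensitivity labels that should never be broadly reachable.
SENSITIVE_LABELS = {"confidential", "highly-confidential"}


@dataclass(frozen=True)
class IndexedItem:
    item_id: str
    label: str                      # classification label on the source item
    allowed_groups: frozenset[str]  # ACL copied from the source repository


def find_overshared(items: list[IndexedItem]) -> list[str]:
    """Return IDs of sensitive items reachable through a broad group."""
    return [
        item.item_id
        for item in items
        if item.label.lower() in SENSITIVE_LABELS
        and any(g.lower() in BROAD_GROUPS for g in item.allowed_groups)
    ]


if __name__ == "__main__":
    inventory = [
        IndexedItem("hr-salaries.xlsx", "Confidential", frozenset({"Everyone"})),
        IndexedItem("handbook.pdf", "General", frozenset({"Everyone"})),
        IndexedItem("board-deck.pptx", "Highly-Confidential", frozenset({"execs"})),
    ]
    print(find_overshared(inventory))
```

Only the salary file is flagged: the handbook is broadly shared but not sensitive, and the board deck is sensitive but limited to a narrow group.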

Protecting Sensitive Data from Prompts

Knostic recognizes that data submitted to generative AI (prompts plus their context) often contains sensitive content. Its governance model aims to filter, mask, or block sensitive data at the knowledge and inference layer before it reaches AI models, thereby minimizing both direct and inferred data-leakage risk. It also provides real-time guardrails and policy enforcement to prevent sensitive data from being sent to AI, even in dynamic workflows or SaaS integrations.
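Masking or blocking sensitive data before a prompt reaches a model can be sketched with simple pattern detectors. The Python below is a minimal, hypothetical example, not Knostic's knowledge-layer implementation: the three regular expressions and the rule that a cloud access key blocks the prompt outright are illustrative assumptions. Regex matching also catches only direct identifiers; it does nothing about inferred leakage.

```python
import re
from typing import Optional

# Illustrative detectors only; production DLP relies on far richer classifiers.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "AWS_ACCESS_KEY": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}
# Hypothetical policy: credentials block the whole prompt; other matches are masked.
BLOCKING = {"AWS_ACCESS_KEY"}


def screen_prompt(prompt: str) -> tuple[str, Optional[str]]:
    """Return ("block", None) or ("allow", prompt with sensitive spans masked)."""
    for name in BLOCKING:
        if PATTERNS[name].search(prompt):
            return "block", None
    masked = prompt
    for name, pattern in PATTERNS.items():
        masked = pattern.sub(f"[{name}]", masked)  # replace each match with a tag
    return "allow", masked


if __name__ == "__main__":
    print(screen_prompt("Email jane.doe@example.com about SSN 123-45-6789"))
    print(screen_prompt("Use key AKIAABCDEFGHIJKLMNOP for the upload"))
```

The first call returns `('allow', 'Email [EMAIL] about SSN [US_SSN]')` and the second returns `('block', None)`. Masking keeps the rest of the prompt usable, which reflects the balance between productivity and protection described in this section.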

In Knostic’s architecture, the knowledge layer sits between data repositories and the model, integrating with IAM and data-classification systems to enforce permissions before content is exposed. Because enforcement occurs here, legitimate AI use remains possible while risky prompts are flagged or blocked. This layer bridges existing IAM permissions, Purview/DLP labels, and the AI runtime, enabling Knostic to enforce role-, context-, and data-based controls without replacing existing identity or classification tooling. This balance ensures both productivity and protection, which is why many compliance-heavy organizations pick Knostic.
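A small policy function can illustrate the idea of enforcing permissions in a layer between data and model by combining identity, context, and classification. This Python sketch is hypothetical and not Knostic's API. The `Request` and `Content` types, the label ranking, and the managed-device rule are assumptions made for the example. The function returns a decision for one piece of retrieved content before that content is added to a model's context.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    MASK = "mask"
    DENY = "deny"


@dataclass(frozen=True)
class Request:
    user_groups: frozenset[str]  # groups from the IAM provider (hypothetical field)
    managed_device: bool         # context signal (hypothetical field)


@dataclass(frozen=True)
class Content:
    allowed_groups: frozenset[str]  # ACL copied from the source repository
    label: str                      # classification label, e.g. from a DLP tool


# Hypothetical label ranking; real taxonomies are organization-specific.
LABEL_RANK = {"public": 0, "general": 1, "confidential": 2, "highly-confidential": 3}


def decide(req: Request, content: Content) -> Decision:
    # Role: never exceed the permissions the user already has at the source.
    if not (req.user_groups & content.allowed_groups):
        return Decision.DENY
    # Data: unknown labels are treated as the most sensitive (fail closed).
    rank = LABEL_RANK.get(content.label.lower(), 3)
    # Context: restrict sensitive content on unmanaged devices.
    if not req.managed_device:
        if rank >= 3:
            return Decision.DENY
        if rank == 2:
            return Decision.MASK
    return Decision.ALLOW
```

Treating unknown labels as the most sensitive is a deliberate fail-closed choice: unclassified content is denied on unmanaged devices rather than silently allowed.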

Defending the Whole AI Supply Chain

Knostic doesn’t treat AI security as a single point product. It secures the full AI lifecycle, from code and prompts, through assistants and agents, to runtime usage and data flows. It enforces policies consistently across all layers: coding, prompt submission, assistant behavior, and AI tool usage. Because of this coverage, companies don’t need to stitch together multiple tools or vendor solutions, reducing complexity and operational overhead. Continuous monitoring, audit logging, and risk scoring across the entire AI supply chain give teams confidence and readiness for compliance as AI scales. This model also helps maintain security across AWS, GCP, Azure, SaaS platforms such as M365 and ServiceNow, and traditional on-premises systems.
